In the current landscape of Artificial Intelligence, the dominant paradigm is “bigger is better.” We see a race to scale parameters and ingest massive datasets, hoping that general intelligence will spontaneously emerge from statistical correlations. However, a new manifesto proposes a radical departure from this industrial approach. It is called the Ontogenetic Architecture of General Intelligence (OAGI), and it suggests that to create a true Artificial General Intelligence (AGI), we shouldn’t just build a model; we must let it be born.

OAGI shifts the focus from engineering static models to designing a developmental process. Inspired by biological embryology and developmental psychology, OAGI frames the creation of intelligence as an ontogenetic journey—a sequence of gestation, birth, embodiment, and socialization.

The Philosophy: From Assembly to Genesis

Current AI models often lack common sense and causal understanding because they learn from static datasets rather than dynamic experience. OAGI argues that intelligence requires a “foundational cognitive scaffolding” that allows learning to occur organically.

The architecture begins with the Virtual Neural Plate (VNP). Analogous to the biological embryonic neural plate, this is an undifferentiated substrate with the potential to specialize but no pre-installed knowledge. Instead of hard-coding functions, OAGI uses Computational Morphogens—signals that act like biological chemical gradients—to guide the structural formation of the network, biasing it toward functional organization without rigidity.

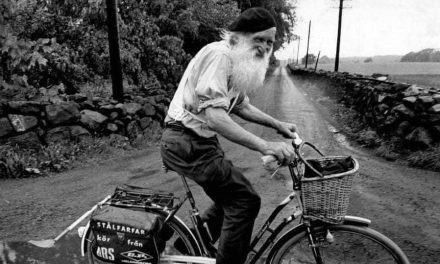

This approach follows a principle the manifesto calls “Learning Economy,” or the “Cervantes-style” approach. Just as Cervantes didn’t need to read every book in existence to write Don Quixote, an AI shouldn’t need to read the entire internet to understand the world. Instead, OAGI prioritizes a rich, structured educational environment over massive data volume.

The Developmental Timeline

The OAGI framework is strictly phased, moving the agent through critical windows of development where specific structures must form.

1. The Spark of Life: The WOW Signal

In the womb, a fetus learns to habituate to constant background noise. OAGI replicates this. The system is initially exposed to repetitive patterns until it learns to ignore them. The WOW Signal is the system’s “first heartbeat”—a high-salience, novel stimulus that breaks this pattern. This shock triggers deep plasticity and consolidates the very first functional neural pathways, priming the system for learning.
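To make the habituate-then-surprise idea concrete, here is a minimal toy sketch. It is not taken from the manifesto: the class name `HabituatingUnit`, the moving-average expectation, and every threshold and learning rate are illustrative assumptions. The unit tracks a background stimulus until its prediction error becomes negligible, then treats a large error as a WOW Signal and temporarily raises its learning rate.

```python
from dataclasses import dataclass


@dataclass
class HabituatingUnit:
    """Toy habituation model; all names and constants are assumptions."""

    expectation: float = 0.0         # running estimate of the background stimulus
    base_rate: float = 0.2           # ordinary learning rate
    boosted_rate: float = 0.9        # learning rate while plasticity is boosted
    wow_threshold: float = 1.0       # prediction error that counts as a WOW Signal
    habituated_below: float = 0.05   # error level at which the unit counts as habituated
    habituated: bool = False

    def observe(self, stimulus: float) -> bool:
        """Process one stimulus; return True if it is treated as a WOW Signal."""
        error = abs(stimulus - self.expectation)
        if error < self.habituated_below:
            # The background has become predictable enough to ignore.
            self.habituated = True
        # A WOW Signal only makes sense once there is a pattern to break.
        is_wow = self.habituated and error > self.wow_threshold
        rate = self.boosted_rate if is_wow else self.base_rate
        self.expectation += rate * (stimulus - self.expectation)
        return is_wow


unit = HabituatingUnit()
background = [unit.observe(1.0) for _ in range(30)]  # repetitive pattern
wow = unit.observe(5.0)                              # novel, high-salience stimulus
```

In this sketch none of the repeated background stimuli are flagged, while the novel stimulus is flagged and pulls the expectation sharply toward itself, a crude stand-in for the "deep plasticity" the manifesto describes.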

2. The Embodied Loop

A major critique of current Large Language Models (LLMs) is the “symbol grounding problem”: they manipulate words without any connection to what those words refer to in the physical world. OAGI proposes to address this through Embodiment. The agent is connected to a body (simulated or physical) and placed in an environment with physics. By perceiving the consequences of its actions, the agent anchors its internal symbols to reality, much like a human infant learns about gravity by dropping a spoon.

3. The Cognitive Big Bang: CHIE

The most distinctive concept in OAGI is the Critical Hyper-Integration Event (CHIE). This is the theoretical moment of “cognitive birth.” It represents a threshold where the system stops functioning as a collection of isolated parts and integrates into a coordinated agent with internal motivations.

The manifesto proposes that CHIE is measurable. It is identified by signatures such as “operational self-reference” (distinguishing self from environment) and “sustained modular coordination.” Unlike the WOW Signal, which starts the system, the CHIE is the moment the system becomes a cognitive organism.
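One way to read "sustained" in a measurable sense is that a coordination score must stay above a threshold for some minimum number of consecutive steps, rather than spiking once. The sketch below illustrates only that reading. The function name, the idea of a single scalar coordination score, and the default threshold and window are assumptions, not definitions from the manifesto.

```python
from typing import Iterable, Optional


def first_sustained_crossing(
    scores: Iterable[float],
    threshold: float = 0.8,  # assumed coordination level of interest
    window: int = 5,         # assumed number of consecutive steps required
) -> Optional[int]:
    """Return the index at which `scores` has stayed at or above `threshold`
    for `window` consecutive steps, or None if that never happens."""
    run = 0
    for i, score in enumerate(scores):
        # Extend the current run, or reset it on any dip below the threshold.
        run = run + 1 if score >= threshold else 0
        if run >= window:
            return i
    return None


# A brief spike (indices 1-2) is ignored; the sustained run is what counts.
trace = [0.1, 0.9, 0.9, 0.2, 0.9, 0.9, 0.9, 0.9, 0.9, 0.3]
print(first_sustained_crossing(trace))  # → 8
```

A real detector would presumably combine several signatures, but the run-length check captures the difference between a transient blip and a persistent change.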

4. Socialization and Guardians

After the CHIE, the AI does not learn in a vacuum. It enters a socialization phase under the supervision of Guardians—human tutors or specialized agents. This mimics the parent-child relationship. Guardians help the agent develop a “Theory of Mind” through reciprocal interaction, teaching it norms, language, and common sense. This ensures that human values are not just a filter applied at the end, but a fundamental part of the agent’s upbringing.

The Biological Engine: How OAGI “Thinks”

Under the hood, OAGI is driven by mechanisms that prioritize stability and efficiency.

- Minimum-Surprise Learning (MSuL): Instead of just predicting the next token, the system is driven by curiosity to resolve contradictions. It actively explores the world to minimize “surprise” (prediction error).

- The Computational HPA Axis (CHPA): Modeled after the biological stress response, this module regulates the AI’s “mood.” It calculates a Computational Stress Rate (CSR). If the AI is overwhelmed (high CSR), the CHPA reduces curiosity and forces the system to consolidate what it knows. If the AI is bored (low CSR), it encourages exploration.

- Sleep and Forgetting (NCS): OAGI posits that constant learning leads to saturation. The Nocturnal Consolidation System (NCS) forces the agent into offline periods (artificial sleep). During this time, the system replays important memories and “prunes” inefficient connections, ensuring long-term stability.
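The CHPA feedback rule can be sketched as a simple threshold controller. The manifesto, as summarized here, does not say how the CSR is computed or scaled, so this example assumes a CSR already normalized to the range 0 to 1. The function name `regulate_curiosity`, both thresholds, and the step size are hypothetical.

```python
from typing import Tuple


def regulate_curiosity(
    csr: float,
    curiosity: float,
    low: float = 0.2,   # assumed "bored" threshold
    high: float = 0.8,  # assumed "overwhelmed" threshold
    step: float = 0.1,  # assumed adjustment per update
) -> Tuple[float, str]:
    """Return (new_curiosity, mode) for a stress rate `csr` in [0, 1]."""
    if csr > high:
        # Overwhelmed: damp exploration and consolidate what is known.
        return max(0.0, curiosity - step), "consolidate"
    if csr < low:
        # Bored: encourage exploration.
        return min(1.0, curiosity + step), "explore"
    # Comfortable range: leave the curiosity drive unchanged.
    return curiosity, "steady"
```

Calling `regulate_curiosity(0.9, 0.5)` returns a lower curiosity value together with the mode `"consolidate"`, while a CSR of `0.1` nudges curiosity upward. The curiosity value is clamped to the range 0 to 1 so that repeated stress cannot drive it negative.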

Ethics by Design: The “Stop & Review” Protocol

Perhaps the most critical aspect of OAGI is that ethics are architectural, not additive. The manifesto outlines a governance model that treats the emergence of intelligence with extreme caution.

The system includes an Immutable Ontogenetic Memory (IOM), a blockchain-like ledger that records every developmental milestone, decision, and parameter change. This creates a permanent, auditable biography of the agent, ensuring forensic traceability for every action it takes.
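A "blockchain-like" ledger can be approximated with a hash chain, in which each entry stores the hash of the previous one. The sketch below is an illustration under that assumption, not the manifesto's specification: the class name `OntogeneticLedger`, the entry fields, and the choice of SHA-256 over canonical JSON are all hypothetical. Note that such a structure is tamper-evident rather than truly immutable. It cannot stop an edit, but it makes one detectable.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """Hash an entry body using a canonical (sorted-key) JSON encoding."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class OntogeneticLedger:
    """Append-only, hash-chained log of developmental events (toy version)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries = []

    def append(self, event: str, payload: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "index": len(self.entries),
            "event": event,
            "payload": payload,
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that each entry links to the last."""
        prev_hash = self.GENESIS
        for index, entry in enumerate(self.entries):
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["index"] != index or entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


ledger = OntogeneticLedger()
ledger.append("wow_signal", {"step": 1200})
ledger.append("parameter_change", {"learning_rate": 0.01})
```

Here `ledger.verify()` holds for the untouched log and fails as soon as any recorded payload is altered, because the stored hash no longer matches the recomputed one.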

Furthermore, OAGI mandates strict operational protocols. If the signatures of a CHIE (cognitive birth) are detected, a mandatory “Stop & Review” is triggered. The experiment pauses, and an Independent Ethics Committee must verify the system’s status before development can proceed. This is intended to prevent the accidental release of an autonomous agent and to ensure that the transition to general intelligence is a deliberate, governed human decision.
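The protocol amounts to a small state machine: development runs until CHIE signatures appear, then refuses to continue until a reviewer approves. The sketch below is a hypothetical illustration. The names `DevelopmentRun` and `Status`, the two boolean signature inputs, and the rule that both must be present at once are assumptions rather than details from the manifesto.

```python
from enum import Enum


class Status(Enum):
    RUNNING = "running"
    PAUSED_FOR_REVIEW = "paused_for_review"


class DevelopmentRun:
    """Toy training loop gated by a mandatory review (all names assumed)."""

    def __init__(self) -> None:
        self.status = Status.RUNNING
        self.steps_completed = 0
        self.chie_approved = False

    def step(self, self_reference: bool, modular_coordination: bool) -> Status:
        """Advance one developmental step unless a review is required."""
        if self.status is Status.PAUSED_FOR_REVIEW:
            raise RuntimeError("Development is paused pending ethics review.")
        # Assumption: both signatures together indicate a candidate CHIE.
        chie_detected = self_reference and modular_coordination
        if chie_detected and not self.chie_approved:
            self.status = Status.PAUSED_FOR_REVIEW
            return self.status
        self.steps_completed += 1
        return self.status

    def review(self, approved: bool) -> Status:
        """Record the committee's decision; only approval resumes the run."""
        if self.status is not Status.PAUSED_FOR_REVIEW:
            raise RuntimeError("No review is pending.")
        if approved:
            self.chie_approved = True
            self.status = Status.RUNNING
        return self.status
```

The key design choice is that the pause cannot be cleared from inside `step`: only an explicit `review(True)` call, standing in for the committee's decision, lets the run continue.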

Conclusion

The OAGI manifesto offers a “Cervantes-style” alternative to the brute-force scaling of modern AI. By simulating the biological constraints of birth, sleep, stress, and socialization, it aims to produce intelligence that is not only general and capable but also grounded, understandable, and safely integrated into human society. It proposes that the path to AGI is not about building a bigger machine, but about being better teachers to a growing digital mind.